Over the past three years, I have spent a good portion of my time testing, planning, designing, and then migrating our DC network from Cisco FabricPath and Classic Ethernet environments to VXLAN BGP/EVPN and, at the same time, from a classic hierarchical two-tier architecture to a more modern 400Gb-based Clos topology.

The migration is not yet 100% complete, but it is well underway. I have gained significant experience on the subject along the way, so I think it is time to share my knowledge and experiments with our community.

This is my first post on this topic, dedicated to the “why”. Stay tuned for future articles on this theme.

The context before the migration

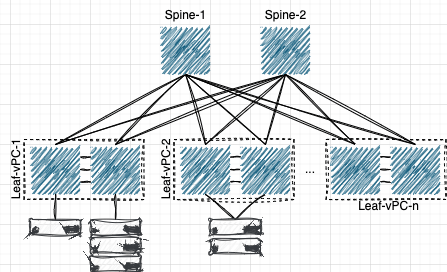

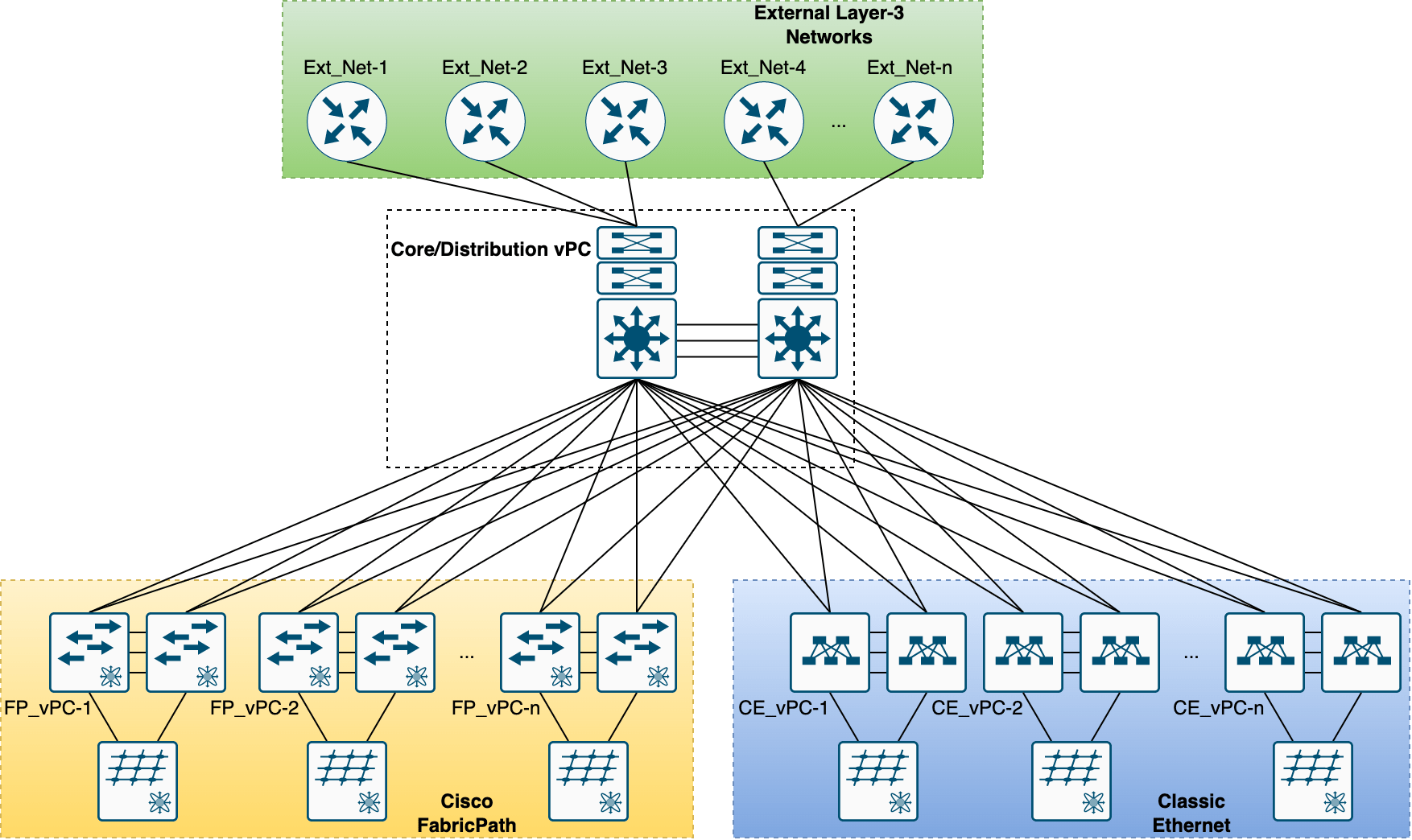

In 2019, our data-center network was based on a hierarchical model: a pair of Cisco Nexus 7700-series switches in vPC acting as a collapsed core/distribution layer, and many access/ToR switches installed in pairs, also in vPC mode. Some of the ToR switches, from the Cisco Nexus 5600-series, were using FabricPath. The others, from the more recent Cisco Nexus 9300-series, on which FabricPath is not available, were using what is now called “Classic Ethernet” or CE. By using vPC across the entire network, we had all the links active. On top of that, we had a few hundred VLANs, some VRFs, and a cluster of firewalls to segregate the traffic. I think this was a standard environment for small to medium-sized data centers a few years ago.

Here is a simplified design:

On top of that, to make our daily operations more challenging, we had other networks connected at layer 3 to our core switches, to reach the different clusters we also operate. Some of these networks use proprietary technology, such as the one for our future flagship supercomputer, and therefore require L3 gateways. Others were already using VXLAN BGP/EVPN, for example a network based on Mellanox 100Gb switches and the Cumulus Linux OS (both are part of NVIDIA today), so we kept the fabrics separate and interconnected them at layer 3.

Why migrate to VXLAN & Clos Architecture?

The main points that motivated us to evolve our DC to VXLAN BGP/EVPN, and therefore to a Clos topology, were scalability and the elimination of spanning tree within the fabric.

Scalability

With a hierarchical two-tier architecture, we were forced to terminate all L3 links on the core/distribution switches. The alternative would have been to use dedicated VLANs and SVIs to connect the L3 links on the access switches, but we wanted to avoid this as much as possible, mainly for STP reasons, but also because routing through SVIs on a vPC cluster is not supported with all Nexus 7700 line cards. As a consequence, our core/distribution switches had almost no free ports left and all line-card slots were full. Replacing them with larger modular switches while keeping the same architecture would only have pushed the same issues back a few months.

Beyond that, the scalability and flexibility of the Clos topology are far greater than those of the hierarchical model, especially since vPC limits the core of the hierarchical model to two devices. With a Clos topology, we are no longer limited to two spines. We can increase the number of spines to increase the total available bandwidth across the fabric, which also reduces the impact if a spine goes down. In the same way, we can increase the number of leafs to increase the number of available ports. All of this can be done without impacting the production network. Furthermore, if we need to scale even more, we can build a new leaf-spine fabric and connect it to the existing one using super-spines. In the end, this topology can scale almost without limit.
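The scaling argument above can be put into numbers with a small Python sketch. It is only an illustration under stated assumptions: the class `ClosFabric`, its field names, and the default values (400Gb uplinks, one link from each leaf to each spine, 48 server-facing ports per leaf) are hypothetical and do not describe our actual fabric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClosFabric:
    """Two-tier leaf-spine fabric in which every leaf connects to every spine.

    All default values are illustrative assumptions, not real design figures.
    """
    spines: int
    leaves: int
    uplink_gbps: int = 400            # assumed speed of one leaf-to-spine link
    links_per_spine: int = 1          # assumed parallel links from a leaf to each spine
    access_ports_per_leaf: int = 48   # assumed server-facing ports per leaf

    def leaf_uplink_gbps(self) -> int:
        """Uplink bandwidth of a single leaf towards the spine layer."""
        return self.spines * self.links_per_spine * self.uplink_gbps

    def fabric_uplink_gbps(self) -> int:
        """Sum of the uplink bandwidth of all leaves."""
        return self.leaves * self.leaf_uplink_gbps()

    def access_ports(self) -> int:
        """Total server-facing ports offered by the leaf layer."""
        return self.leaves * self.access_ports_per_leaf

    def capacity_lost_on_spine_failure(self) -> float:
        """Fraction of each leaf's uplink bandwidth lost when one spine fails."""
        return 1 / self.spines

    def spine_ports_needed(self) -> int:
        """Leaf-facing ports each spine must provide."""
        return self.leaves * self.links_per_spine


# Compare the effect of adding spines while keeping 16 leaves.
for spine_count in (2, 4, 8):
    fabric = ClosFabric(spines=spine_count, leaves=16)
    print(
        f"{spine_count} spines: {fabric.leaf_uplink_gbps()} Gb/s per leaf, "
        f"{fabric.capacity_lost_on_spine_failure():.1%} lost if one spine fails"
    )
```

With two spines, a single spine failure removes half of each leaf's uplink capacity; with four spines, only a quarter. Adding leaves raises `access_ports()` without changing the per-leaf bandwidth, as long as each spine still has enough free ports (`spine_ports_needed()`).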

Remove STP from the fabric

The second reason was to get rid of STP inside the fabric. As I wrote above, by using Classic Ethernet in combination with vPC, or by using FabricPath, we had all the links active and, in theory, fewer STP issues. Despite this, we always had long discussions and hesitations when we had to connect parts of another vendor’s network to ours. At layer 2, we risked introducing STP problems. At layer 3, we still needed layer 2 to reach the SVIs on the core switches, so STP issues were again possible. The only “safe” solution was to connect these networks at layer 3 directly on the core switches. With VXLAN BGP/EVPN and a Clos topology, the STP domain is limited to the links between the leafs and the servers. In addition, being able to connect external networks directly to the leafs is a big improvement in scalability and flexibility.

400Gb Ethernet

In addition, the Cisco Nexus 7700 platform is limited to 100Gb Ethernet, while in 2019, 400Gb Ethernet was starting to arrive. In the HPC world, the amounts of data are massive: both east-west and north-south data transfers are huge. The previous upgrade of the inter-switch links from 40Gb to 100Gb was therefore done very early, because it was a real need. Now, the next upgrade from 100Gb to 400Gb is more than just a lifecycle evolution to be achieved over time: it is a critical requirement to keep supporting the growth of data transfers within the center. This is another argument for moving away from our Nexus 7700 platforms and building a new 400Gb-based network.

And more…

Many other reasons came into play in this choice. One is the ability to run BGP between our switches and our Kubernetes nodes. Another is the upcoming EoL of our Nexus 5600-series switches and of the FabricPath technology, as well as the probable future EoL of the Cisco Nexus 2k-series/FEX: since we will have to replace our FEXes sooner or later, the overall scalability of the network matters here too. VXLAN BGP/EVPN also makes tenants/VRFs easier to operate, including inter-VRF route leaking with route-target import/export maps and rules. It is a non-proprietary technology, even if we all know that each vendor adds its own features on top, so we may be able to add different network vendors to the fabric in a second step. Finally, vPC Fabric Peering lets us get rid of the physical links used for the vPC peer-links. And I am certainly forgetting many other points.
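To illustrate the idea behind the route-target based inter-VRF leaking mentioned above, here is a deliberately simplified Python model. It is a sketch, not a switch configuration: the `Vrf` class, the function `imported_prefixes`, the VRF names, the route-target values, and the prefixes are all hypothetical. It also assumes that every prefix of a VRF is exported with all of that VRF's export route-targets, whereas real export maps can set them per prefix.

```python
from dataclasses import dataclass, field


@dataclass
class Vrf:
    """Simplified VRF: a name, its route-targets, and its local prefixes."""
    name: str
    export_rts: set[str]
    import_rts: set[str]
    local_prefixes: set[str] = field(default_factory=set)


def imported_prefixes(target: Vrf, vrfs: list[Vrf]) -> dict[str, str]:
    """Return {prefix: source VRF name} for routes leaked into `target`.

    A route is imported when at least one route-target exported by the
    source VRF is present in the import list of the target VRF.
    """
    leaked: dict[str, str] = {}
    for source in vrfs:
        if source is target:
            continue  # a VRF's own routes are not "leaked"
        if source.export_rts & target.import_rts:
            for prefix in sorted(source.local_prefixes):
                leaked[prefix] = source.name
    return leaked


# Hypothetical tenants: "shared" services are visible from "prod" and "lab",
# but only "prod" routes are leaked back into "shared".
prod = Vrf("prod", {"65000:100"}, {"65000:100", "65000:900"}, {"10.10.0.0/24"})
lab = Vrf("lab", {"65000:200"}, {"65000:200", "65000:900"}, {"10.20.0.0/24"})
shared = Vrf("shared", {"65000:900"}, {"65000:900", "65000:100"}, {"10.90.0.0/24"})
vrfs = [prod, lab, shared]

for vrf in vrfs:
    print(vrf.name, "imports", imported_prefixes(vrf, vrfs))
```

In this model, "prod" and "lab" never see each other's prefixes because neither imports the other's export route-target, while both can reach the "shared" prefix.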

All these points contributed to the decision to migrate to a VXLAN BGP/EVPN network based on a Clos topology.
